About object classification

In my second week I started to develop software for training an object classifier using images. The idea is that, given an image as input (for example, an image of potatoes), the classifier gives the class of the image as output. This is done using Computer Vision and Machine Learning techniques. The Computer Vision part is that the program computes descriptors for the image, and the Machine Learning part is that those descriptors are used to train a model that classifies new sets of descriptors. As an analogy, a text article could be classified as sports, political news or some other class depending on the words it uses. If an article has words like football, goals, points, team, etc., it is probably a sports article. In that sense, the words are the descriptors of the article, and they differ depending on the class the article belongs to. A model is a rule that takes a set of descriptors and predicts the class they describe.
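The article analogy can be made concrete with a toy sketch in Python, the language the program is written in. Here the descriptors are word counts and the "model" is a hand-written rule. The keyword lists and the functions `describe` and `classify` are hypothetical and only illustrate the idea; a real classifier learns its rule from training data instead.

```python
from collections import Counter

# Hypothetical keyword lists acting as a hand-written "model": a rule that maps
# descriptors (word counts) to a class. A trained classifier would learn this.
CLASS_KEYWORDS = {
    "sports": {"football", "goals", "points", "team"},
    "politics": {"election", "government", "minister", "vote"},
}


def describe(article: str) -> Counter:
    """Descriptors of an article: how many times each word appears."""
    return Counter(article.lower().split())


def classify(descriptors: Counter) -> str:
    """Predict the class whose keywords appear most often; 'other' if none do."""
    scores = {
        label: sum(descriptors[word] for word in keywords)
        for label, keywords in CLASS_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "other"


print(classify(describe("The team scored three goals in the football final")))  # sports
```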

About the program

I created a program in Python and OpenCV that uses ORB local descriptors, VLAD global descriptors and an SVM as classifier. It is free and can be downloaded from GitHub: https://github.com/HenrYxZ/object-classification. To run it, just use the command line:

python main.py

The program will look for a "dataset" directory inside the project folder and generate a Dataset object with the images found there. The images must be in a folder named after their class; for example, all the images of potatoes must be in a "potatoes" folder. You don't have to divide the images into training and testing sets, because the program does that automatically: a random 1/3 of the images is selected for testing and the rest is used for training. The Dataset object is then stored in a file so that it can be reused later; it records which image belongs to which set and to which class.
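A minimal sketch of this dataset step, assuming the layout described above (one subfolder per class inside `dataset`). The helper names `split_dataset` and `save_split`, the accepted file extensions, the use of `pickle` for storage and the per-class split are assumptions for illustration, not necessarily how the program does it.

```python
import os
import pickle
import random

# Assumption: which file extensions count as images.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".bmp"}


def split_dataset(root="dataset", test_fraction=1 / 3, seed=None):
    """Return (train, test) lists of (image_path, class_name) pairs.

    Each subfolder of `root` is one class. Assumption: the random split is done
    per class, so every class appears in both the training and testing sets.
    """
    rng = random.Random(seed)
    train, test = [], []
    for class_name in sorted(os.listdir(root)):
        class_dir = os.path.join(root, class_name)
        if not os.path.isdir(class_dir):
            continue  # ignore stray files next to the class folders
        images = sorted(
            os.path.join(class_dir, name)
            for name in os.listdir(class_dir)
            if os.path.splitext(name)[1].lower() in IMAGE_EXTENSIONS
        )
        rng.shuffle(images)
        n_test = round(len(images) * test_fraction)
        test += [(path, class_name) for path in images[:n_test]]
        train += [(path, class_name) for path in images[n_test:]]
    return train, test


def save_split(train, test, filename="dataset.pickle"):
    """Store the split so later runs reuse the same train/test assignment."""
    with open(filename, "wb") as f:
        pickle.dump({"train": train, "test": test}, f)
```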

After that, the local descriptors for the training set are computed using OpenCV functions. This tutorial shows how ORB descriptors are obtained: http://opencv-python-tutroals.readthedocs.org/en/latest/py_tutorials/py_feature2d/py_orb/py_orb.html. I resize every image that has more than 640 pixels on one of its sides so that its longest side is 640 pixels, preserving the aspect ratio. This way every image yields a similar number of local descriptors. A very high-resolution image may produce a lot of local descriptors, and it would be difficult to have enough memory to store them all. Even so, if the dataset has too many images, the program may crash because the computer may not be able to hold all the descriptors in RAM.

Figure: An example of ORB descriptors found in a cassava root image
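The resizing rule can be sketched as a small helper that only computes the new size, so the arithmetic can be checked without OpenCV. `target_size` and `MAX_SIDE` are hypothetical names.

```python
MAX_SIDE = 640  # longest allowed side, in pixels, as described above


def target_size(width, height, max_side=MAX_SIDE):
    """Return (new_width, new_height) with the longest side capped at max_side.

    Images that already fit are left unchanged; the aspect ratio is preserved.
    """
    longest = max(width, height)
    if longest <= max_side:
        return width, height
    # Scale both sides by the same factor so the longest one becomes max_side.
    return (max(1, round(width * max_side / longest)),
            max(1, round(height * max_side / longest)))


print(target_size(1920, 1080))  # (640, 360)
print(target_size(480, 320))    # (480, 320), already small enough
```

With OpenCV, the result could be passed as `cv2.resize(img, target_size(w, h))` with `h, w = img.shape[:2]`; note that `cv2.resize` expects the size as `(width, height)`. ORB descriptors can then be computed with `orb.detectAndCompute(img, None)`, where `orb = cv2.ORB_create()` in OpenCV 3 and later (`cv2.ORB()` in OpenCV 2.4).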

When the local descriptors are ready, a codebook is generated using K-Means (this technique is known as Bag of Words). A codebook is a set of vectors, called codewords, that in theory have more descriptive power for recognizing classes. For example, a codeword for a car may be a wheel, because cars have wheels no matter how different they are. The K-Means algorithm finds centers that represent clusters by minimizing the distance between the elements of each cluster and its center. The descriptors are grouped into k clusters (k is predefined), randomly at first and then iteratively into the groups that minimize those distances. So, for example, with k=128 there will be 128 codewords, which are the center vectors obtained by K-Means. A center is an average of descriptors, and its cluster may contain descriptors from different classes.

Figure: An example of a codebook with multiple words

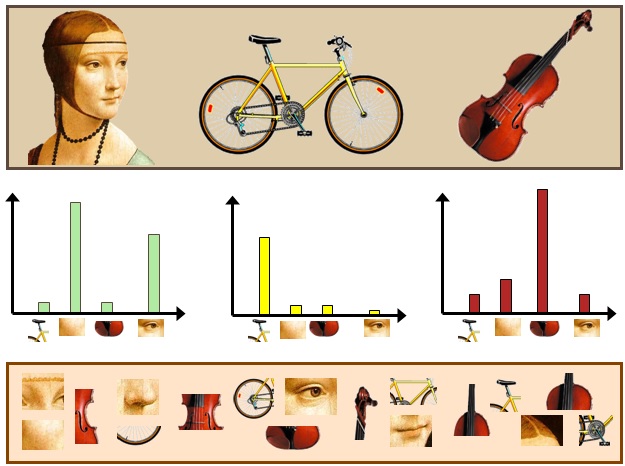
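A minimal NumPy sketch of K-Means (Lloyd's algorithm) for building a codebook. It starts from k randomly chosen descriptors as centers and then alternates the assignment and update steps described above. The function name and parameters are hypothetical, and the program itself may instead rely on OpenCV's `cv2.kmeans`. This version computes all pairwise distances at once, so it needs memory proportional to n * k * d and is only meant for small examples.

```python
import numpy as np


def build_codebook(descriptors, k, iterations=20, seed=0):
    """Cluster local descriptors into k codewords with Lloyd's K-Means.

    descriptors: (n, d) array, one local descriptor per row (requires n >= k).
    Returns a (k, d) float array of cluster centers (the codewords).
    """
    data = np.asarray(descriptors, dtype=np.float64)
    rng = np.random.default_rng(seed)
    # Initialization: k distinct descriptors chosen at random become the centers.
    centers = data[rng.choice(len(data), size=k, replace=False)].copy()
    for _ in range(iterations):
        # Assignment step: index of the nearest center for every descriptor.
        dists = ((data[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dists.argmin(axis=1)
        # Update step: each center becomes the mean of its assigned descriptors.
        for j in range(k):
            members = data[labels == j]
            if len(members):  # keep the old center if a cluster ends up empty
                centers[j] = members.mean(axis=0)
    return centers
```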

Then, for each image, a VLAD (Vector of Locally Aggregated Descriptors) global descriptor is computed. This descriptor uses the local descriptors and the codebook to create one vector for the whole image. For each local descriptor in the image, the nearest codeword in the codebook is found, and the difference between the two vectors (descriptor minus codeword) is added to the part of the global vector that corresponds to that codeword. The global vector therefore has a length equal to the dimension of the local descriptors multiplied by the number of codewords. In the program, after the VLAD vector is calculated, there is a square root normalization, where every component of the global vector is replaced by the square root of its absolute value, followed by an L2 normalization, where the vector is divided by its norm. This normalization has given better results in my experiments.

Figure: VLAD global descriptors
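A minimal NumPy sketch of the VLAD computation and the two normalizations. One assumption differs from the text: the text describes taking the square root of the absolute value of each component, while this sketch uses the common signed square root, `sign(x) * sqrt(|x|)`, which keeps each component's sign. The function name `vlad` is hypothetical.

```python
import numpy as np


def vlad(descriptors, codebook):
    """VLAD global descriptor for one image.

    descriptors: (n, d) local descriptors of the image.
    codebook:    (k, d) codewords obtained with K-Means.
    Returns a normalized vector of length k * d.
    """
    x = np.asarray(descriptors, dtype=np.float64)
    c = np.asarray(codebook, dtype=np.float64)
    k, d = c.shape
    # Nearest codeword for every local descriptor.
    nearest = ((x[:, None, :] - c[None, :, :]) ** 2).sum(axis=2).argmin(axis=1)
    v = np.zeros((k, d))
    for j in range(k):
        # Accumulate residuals (descriptor - codeword) in codeword j's block.
        v[j] = (x[nearest == j] - c[j]).sum(axis=0)
    v = v.ravel()
    # Power normalization (assumed signed variant): sign(x) * sqrt(|x|).
    v = np.sign(v) * np.sqrt(np.abs(v))
    # L2 normalization; an all-zero vector is returned unchanged.
    norm = np.linalg.norm(v)
    return v / norm if norm > 0 else v
```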

These global descriptors, together with their labels, are given as input to a Support Vector Machine (SVM), which creates a model from the training set. I have used a linear kernel, but it's possible to use other kernels such as RBF and maybe get better results. The SVM finds decision boundaries (hyperplanes, for a linear kernel) that divide the vectors into one class or another. It chooses them by maximizing the margin, that is, the distance between the decision boundary and the closest training vectors of each class. OpenCV comes with an SVM class that has a train_auto function, which automatically selects the best parameters for the machine, and that is what I use in the program.
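For reference, this is the standard soft-margin formulation of a two-class linear SVM, with labels $y_i \in \{-1, +1\}$. The margin between the two classes is $2 / \lVert \mathbf{w} \rVert$, so minimizing $\lVert \mathbf{w} \rVert^2$ maximizes it, while the slack variables $\xi_i$ and the parameter $C$ trade the margin against training errors. With more than two classes, the problem is decomposed into several binary ones. As far as I know, `train_auto` chooses values such as $C$ (and, for RBF, $\gamma$) by k-fold cross-validation over a grid of candidates.

```latex
\min_{\mathbf{w},\, b,\, \boldsymbol{\xi}} \; \frac{1}{2} \lVert \mathbf{w} \rVert^2 + C \sum_{i=1}^{N} \xi_i
\quad \text{subject to} \quad
y_i \left( \mathbf{w}^\top \mathbf{x}_i + b \right) \ge 1 - \xi_i, \qquad \xi_i \ge 0, \qquad i = 1, \dots, N
```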

After the SVM is trained, the VLAD vectors of the testing images are calculated in the same way as in training, and the SVM uses them to predict their classes. The accuracy of the prediction is then obtained by dividing the number of images that were correctly classified by the total number of testing images.
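The accuracy computation is simple enough to show directly. `accuracy` is a hypothetical helper that compares predicted and true class names for the testing images.

```python
def accuracy(predicted, expected):
    """Fraction of test images whose predicted class matches the true class."""
    if len(predicted) != len(expected) or not expected:
        raise ValueError("need two non-empty label lists of the same length")
    correct = sum(p == e for p, e in zip(predicted, expected))
    return correct / len(expected)


print(accuracy(["potatoes", "cassava", "potatoes"],
               ["potatoes", "cassava", "cassava"]))  # 0.6666666666666666
```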
