In my fifth week I thought it was time to give the classifier something harder, so I added two more classes to the dataset: tomato and wheat. One application of plant recognition could be robots that help farmers in the future. With semantic knowledge of plants, they could act differently depending on the plant. For example, a robot could give wheat a different kind of fertilizer than a tomato plant.

|

| Training image of the wheat class |

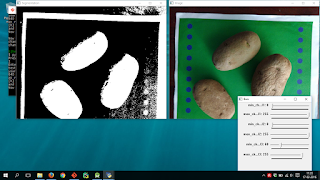

Then I trained with the new dataset and obtained bad results, around 60% accuracy. To understand what was going on, I implemented a confusion matrix so that I could see which classes produced the biggest errors. I realized that classes like potato, which had many images and descriptors, did very well in testing, while classes like cassava, with fewer images and fewer descriptors, did poorly. So the problem was probably that the cluster centers generated by K-Means favored the classes that had more descriptors.
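A confusion matrix simply counts, for every true class, how often each class was predicted. The following is a minimal NumPy sketch, not the code used in this project; the function names are hypothetical. It uses the convention that rows are true classes and columns are predicted classes, which the matrices reported below appear to follow, since every row sums to the 30 test images of a class. Accuracy is the diagonal divided by the total, and per-class recall shows which classes are weak.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Count predictions: row = true class index, column = predicted class index."""
    cm = np.zeros((n_classes, n_classes), dtype=int)
    # np.add.at accumulates correctly even when the same (row, col) pair repeats
    np.add.at(cm, (np.asarray(y_true), np.asarray(y_pred)), 1)
    return cm


def accuracy_percent(cm):
    """Correct predictions (the diagonal) as a percentage of all predictions."""
    return 100.0 * np.trace(cm) / cm.sum()


def per_class_recall(cm):
    """Fraction of each true class that was predicted correctly."""
    return np.diag(cm) / cm.sum(axis=1)


# The ORB, k = 32 matrix reported in this post
orb_k32 = np.array([
    [20, 2, 2, 4, 2],
    [3, 17, 1, 7, 2],
    [5, 3, 14, 4, 4],
    [2, 0, 1, 26, 1],
    [5, 2, 2, 2, 19],
])
print(accuracy_percent(orb_k32))  # 64.0
```

Applied to that matrix, `per_class_recall` makes the weak classes obvious at a glance: tomato gets 14 of 30 right, while wheat gets 26 of 30.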

To fix this problem I decided to use the same number of training and testing images for each class, so I selected 55 training images and 30 testing images for every class in the dataset. I also changed the required structure of the dataset: the training and testing images are now stored in separate folders inside each class folder. Because the split is fixed by where each image is stored, it is no longer necessary to save a dataset object.
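With the split encoded in the folder structure, loading a set is just a directory listing. This is a sketch under assumptions: the layout `<dataset_root>/<class_name>/train/` and `<dataset_root>/<class_name>/test/`, the folder names `train` and `test`, the accepted image extensions, and the helper names `list_split` and `check_balanced` are all hypothetical, since the post does not give the actual names.

```python
from pathlib import Path

# Hypothetical layout: <dataset_root>/<class_name>/train/*.jpg and .../test/*.jpg
IMAGE_SUFFIXES = {".jpg", ".jpeg", ".png"}


def list_split(dataset_root, split):
    """Return {class_name: sorted image paths} for one split ("train" or "test")."""
    images_by_class = {}
    for class_dir in sorted(p for p in Path(dataset_root).iterdir() if p.is_dir()):
        split_dir = class_dir / split
        if not split_dir.is_dir():
            continue  # skip class folders that lack this split
        images_by_class[class_dir.name] = sorted(
            p for p in split_dir.iterdir() if p.suffix.lower() in IMAGE_SUFFIXES
        )
    return images_by_class


def check_balanced(images_by_class, expected):
    """Raise if any class does not have exactly `expected` images."""
    wrong = {
        name: len(paths)
        for name, paths in images_by_class.items()
        if len(paths) != expected
    }
    if wrong:
        raise ValueError(f"classes with the wrong image count: {wrong}")
```

A typical call would be `check_balanced(list_split(root, "train"), 55)` followed by `check_balanced(list_split(root, "test"), 30)`, which fails early if a class folder is missing images instead of silently producing an unbalanced experiment.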

Results with 5 classes

ORB Features

k = 32

Time for getting all the local descriptors of the training images was 00:00:02.476804.

Time for generating the codebook with k-means was 00:00:04.964310.

Time for getting VLAD global descriptors of the training images was 00:00:12.084776.

Time for calculating the SVM was 00:00:23.850120.

Time for getting VLAD global descriptors of the testing images was 00:00:06.300698.

Elapsed time predicting the testing set is 00:00:00.034392

Accuracy = 64.0.

Classes = ['cassava', 'pinto bean', 'tomato', 'wheat', 'potato']

Classes Local Descriptors Counts = [24507, 25720, 24192, 25232, 22500]

Confusion Matrix =

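| True \ Predicted | cassava | pinto bean | tomato | wheat | potato |
|---|---|---|---|---|---|
| cassava | 20 | 2 | 2 | 4 | 2 |
| pinto bean | 3 | 17 | 1 | 7 | 2 |
| tomato | 5 | 3 | 14 | 4 | 4 |
| wheat | 2 | 0 | 1 | 26 | 1 |
| potato | 5 | 2 | 2 | 2 | 19 |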

k = 64

Time for generating the codebook with k-means was 00:00:09.258671.

Time for getting VLAD global descriptors of the training images was 00:00:17.179350.

Time for calculating the SVM was 00:00:46.991844.

Time for getting VLAD global descriptors of the testing images was 00:00:08.864136.

Elapsed time predicting the testing set is 00:00:00.065617

Accuracy = 65.3333333333.

Confusion Matrix =

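| True \ Predicted | cassava | pinto bean | tomato | wheat | potato |
|---|---|---|---|---|---|
| cassava | 19 | 3 | 6 | 1 | 1 |
| pinto bean | 4 | 17 | 2 | 5 | 2 |
| tomato | 4 | 3 | 15 | 6 | 2 |
| wheat | 1 | 1 | 0 | 28 | 0 |
| potato | 4 | 3 | 3 | 1 | 19 |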

k = 128

Time for generating the codebook with k-means was 00:00:17.634565.

Time for getting VLAD global descriptors of the training images was 00:00:27.555537.

Time for calculating the SVM was 00:01:34.641161.

Time for getting VLAD global descriptors of the testing images was 00:00:14.336687.

Elapsed time predicting the testing set is 00:00:00.183201

Accuracy = 64.0.

Confusion Matrix =

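| True \ Predicted | cassava | pinto bean | tomato | wheat | potato |
|---|---|---|---|---|---|
| cassava | 21 | 2 | 3 | 1 | 3 |
| pinto bean | 5 | 13 | 3 | 7 | 2 |
| tomato | 4 | 4 | 14 | 5 | 3 |
| wheat | 3 | 0 | 0 | 27 | 0 |
| potato | 2 | 4 | 2 | 1 | 21 |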

k = 256

Time for generating the codebook with k-means was 00:00:35.860902.

Time for getting VLAD global descriptors of the training images was 00:00:52.757244.

Time for calculating the SVM was 00:03:32.905707.

Time for getting VLAD global descriptors of the testing images was 00:00:26.958474.

Elapsed time predicting the testing set is 00:00:00.383407

Accuracy = 62.6666666667.

Confusion Matrix =

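| True \ Predicted | cassava | pinto bean | tomato | wheat | potato |
|---|---|---|---|---|---|
| cassava | 19 | 4 | 3 | 2 | 2 |
| pinto bean | 5 | 15 | 2 | 6 | 2 |
| tomato | 2 | 2 | 16 | 6 | 4 |
| wheat | 2 | 0 | 0 | 28 | 0 |
| potato | 6 | 3 | 3 | 2 | 16 |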

SIFT Features

k = 32

Time for getting all the local descriptors of the training images was 00:00:31.042207.

Time for generating the codebook with k-means was 00:00:28.069662.

Time for getting VLAD global descriptors of the training images was 00:01:56.548077.

Time for calculating the SVM was 00:01:23.322534.

Time for getting VLAD global descriptors of the testing images was 00:00:57.089969.

Elapsed time predicting the testing set is 00:00:00.142989

Accuracy = 79.3333333333.

Classes = ['cassava', 'pinto bean', 'tomato', 'wheat', 'potato']

Classes Local Descriptors Counts = [73995, 79464, 38025, 86212, 28823]

Confusion Matrix =

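| True \ Predicted | cassava | pinto bean | tomato | wheat | potato |
|---|---|---|---|---|---|
| cassava | 22 | 2 | 3 | 0 | 3 |
| pinto bean | 0 | 24 | 2 | 1 | 3 |
| tomato | 0 | 1 | 23 | 3 | 3 |
| wheat | 0 | 0 | 0 | 30 | 0 |
| potato | 1 | 6 | 1 | 2 | 20 |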

k = 64

Time for generating the codebook with k-means was 00:00:56.495541.

Time for getting VLAD global descriptors of the training images was 00:02:20.884382.

Time for calculating the SVM was 00:03:10.961254.

Time for getting VLAD global descriptors of the testing images was 00:01:07.280931.

Elapsed time predicting the testing set is 00:00:00.338773

Accuracy = 78.6666666667.

Confusion Matrix =

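| True \ Predicted | cassava | pinto bean | tomato | wheat | potato |
|---|---|---|---|---|---|
| cassava | 23 | 1 | 3 | 0 | 3 |
| pinto bean | 1 | 24 | 2 | 1 | 2 |
| tomato | 0 | 0 | 25 | 2 | 3 |
| wheat | 0 | 0 | 1 | 29 | 0 |
| potato | 3 | 7 | 1 | 2 | 17 |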

k = 128

Time for generating the codebook with k-means was 00:48:26.116957.

Time for getting VLAD global descriptors of the training images was 00:03:2.914655.

Time for calculating the SVM was 00:06:52.834055.

Time for getting VLAD global descriptors of the testing images was 01:32:33.834146.

Elapsed time predicting the testing set is 00:00:0.620425

Accuracy = 77.3333333333.

Confusion Matrix =

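| True \ Predicted | cassava | pinto bean | tomato | wheat | potato |
|---|---|---|---|---|---|
| cassava | 23 | 1 | 3 | 0 | 3 |
| pinto bean | 1 | 23 | 3 | 1 | 2 |
| tomato | 0 | 1 | 25 | 1 | 3 |
| wheat | 0 | 0 | 1 | 29 | 0 |
| potato | 1 | 9 | 3 | 1 | 16 |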

k = 256

Time for generating the codebook with k-means was 00:08:52.935980.

Time for getting VLAD global descriptors of the training images was 00:10:37.489346.

Time for calculating the SVM was 03:08:49.511758.

Time for getting VLAD global descriptors of the testing images was 00:05:28.551197.

Elapsed time predicting the testing set is 00:00:02.722835

Accuracy = 79.3333333333.

Confusion Matrix =

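| True \ Predicted | cassava | pinto bean | tomato | wheat | potato |
|---|---|---|---|---|---|
| cassava | 25 | 2 | 1 | 0 | 2 |
| pinto bean | 0 | 25 | 2 | 1 | 2 |
| tomato | 1 | 0 | 22 | 3 | 4 |
| wheat | 0 | 0 | 0 | 30 | 0 |
| potato | 2 | 7 | 2 | 2 | 17 |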

Conclusion

I added more classes to the dataset to see how the classification performance would change. One important lesson was that, if you use K-Means (in our case to build the codebook for the Bag of Words technique), each class should contribute a similar number of descriptors. The best accuracy went from 89.77% with the earlier three classes to 79.33% with five, although the two experiments used different numbers of testing images per class. To make such comparisons fair, the same number of testing images should always be used.
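In this project the balance came from using the same number of images per class, but the SIFT runs above still show uneven descriptor counts (from 28,823 for potato to 86,212 for wheat). One way to enforce the balance directly, which is not what was done here, is to subsample the same number of descriptors from every class before clustering. The sketch below assumes the descriptors are already grouped per class in a dict of NumPy arrays; the function name and the default of "smallest class size" are hypothetical choices.

```python
import numpy as np


def balanced_descriptor_sample(descriptors_by_class, per_class=None, seed=0):
    """Stack the same number of randomly chosen descriptors from every class.

    descriptors_by_class: dict of class name -> array of shape (n_i, d)
    per_class: rows to keep per class; defaults to the smallest n_i
    Returns a float32 array of shape (n_classes * per_class, d).
    """
    rng = np.random.default_rng(seed)
    if per_class is None:
        per_class = min(len(d) for d in descriptors_by_class.values())
    samples = []
    for name in sorted(descriptors_by_class):
        descriptors = np.asarray(descriptors_by_class[name])
        if len(descriptors) < per_class:
            raise ValueError(f"class {name!r} has only {len(descriptors)} descriptors")
        # sample without replacement so no descriptor is counted twice
        rows = rng.choice(len(descriptors), size=per_class, replace=False)
        samples.append(descriptors[rows])
    return np.vstack(samples).astype(np.float32)
```

The result is float32 because that is what OpenCV's `cv2.kmeans` expects as input; it could equally be passed to scikit-learn's `KMeans`. With the SIFT counts above, every class would contribute 28,823 descriptors to the codebook.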

|

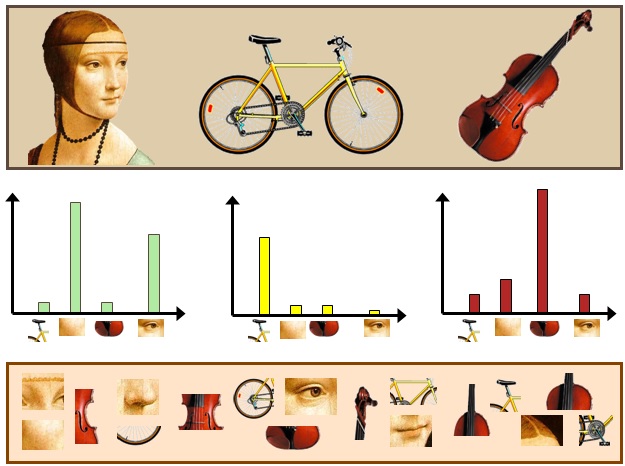

| Confusion matrix for the best result obtained (SIFT k=32) |

Looking in the Android Play Store, I found applications that claimed to recognize 20,000 classes of plants. With my method, handling that many classes would be very difficult.

First, storing all the descriptors for every class would need a lot of memory. With around 50 training images per class there would be about 20,000 descriptors per class, which for 20,000 classes makes 400 M (million) vectors. Each vector has at least 32 floating-point values of 32 bits (4 bytes) each. So all the descriptors together would take 32 × 4 bytes × 400 M = 51,200 M bytes = 51.2 × 10^9 bytes ≈ 47.7 GiB of RAM.
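The memory estimate is easy to reproduce and to vary. This small sketch (the function name is hypothetical) multiplies classes × descriptors per class × values per descriptor × 4 bytes, and reports the result in binary gibibytes (2^30 bytes). The second line assumes 128 values per descriptor, which is the standard length of a SIFT descriptor, to show how much the "at least 32 values" lower bound can grow.

```python
def descriptor_memory_bytes(n_classes, descriptors_per_class, dims, bytes_per_value=4):
    """Bytes needed to keep every descriptor in RAM as 32-bit floats."""
    return n_classes * descriptors_per_class * dims * bytes_per_value


GIB = 2 ** 30

orb_like = descriptor_memory_bytes(20_000, 20_000, 32)    # 32 values per descriptor
sift_like = descriptor_memory_bytes(20_000, 20_000, 128)  # SIFT descriptors have 128 values

print(orb_like, round(orb_like / GIB, 2))    # 51200000000 47.68
print(sift_like, round(sift_like / GIB, 2))  # 204800000000 190.73
```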

Second, running K-Means on 400 M vectors would take a very long time, and so would training an SVM for 20,000 classes on 20,000 × 50 = 1,000,000 VLAD vectors (one per training image).
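The size of the SVM problem can be estimated as well. A VLAD vector concatenates one residual of descriptor length per codeword, so its dimension is k × (descriptor length). The ORB timings above also suggest that SVM training time roughly doubles each time k doubles (23.9 s, 47.0 s, 1:34.6, 3:32.9). The sketch below assumes 32-value descriptors with k = 32, the smallest setting tested. It also assumes a one-vs-one multi-class SVM, which is how libsvm handles multiple classes. Whether the SVM used in this project works that way is an assumption. The helper names are hypothetical.

```python
def vlad_dimension(k, descriptor_dims):
    """A VLAD vector concatenates one descriptor-sized residual per codeword."""
    return k * descriptor_dims


def one_vs_one_classifiers(n_classes):
    """Binary SVMs needed when every pair of classes gets its own classifier."""
    return n_classes * (n_classes - 1) // 2


n_classes = 20_000
n_train = n_classes * 50                 # one VLAD vector per training image
dim = vlad_dimension(k=32, descriptor_dims=32)
train_gib = n_train * dim * 4 / 2 ** 30  # float32 storage for the SVM input

print(n_train, dim, round(train_gib, 2))  # 1000000 1024 3.81
print(one_vs_one_classifiers(n_classes))  # 199990000
```

Under these assumptions, the input alone fits in a few gigabytes, but the number of pairwise classifiers (about 2 × 10^8) is what makes training impractical.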

Third, adding only 2 more classes dropped the accuracy by about 10 percentage points. Having more classes would reduce the discriminating power of the codewords, because there would be many more features, and they would likely be more similar to each other than with fewer classes.

When I looked at the reviews of that application, many people complained that the recognition didn't work. One reviewer said that even one of the most common plants wasn't recognized. Having many possible classes may seem too difficult, but companies like Google have achieved very good performance in image recognition. For example, in the Google Photos app one can search images by many categories such as dog, beach, waterfall, food, etc. So robots that help with farming are possible, and they could help feed the growing population.